A local LLM that is fast, good with agents, and reliable.

I’ve been tinkering with AI for a while now – chatbots, tiny text generators, even a silly little poem‑bot that writes haikus for my cat. Most of the time I’m stuck juggling limited RAM, half‑baked APIs and the ever‑looming question: “Will this thing actually work in production?”

Enter NVIDIA’s Nemotron‑30B. I’ll be honest: when I first saw the name I thought it was just another “big‑number” model that would cost a fortune to run. Turns out, it’s both a powerhouse and surprisingly affordable – at least for the way I’m using it: mostly as a local LLM that runs in LM Studio and does some agent‑based searches for me.

What Exactly Is Nemotron‑30B?

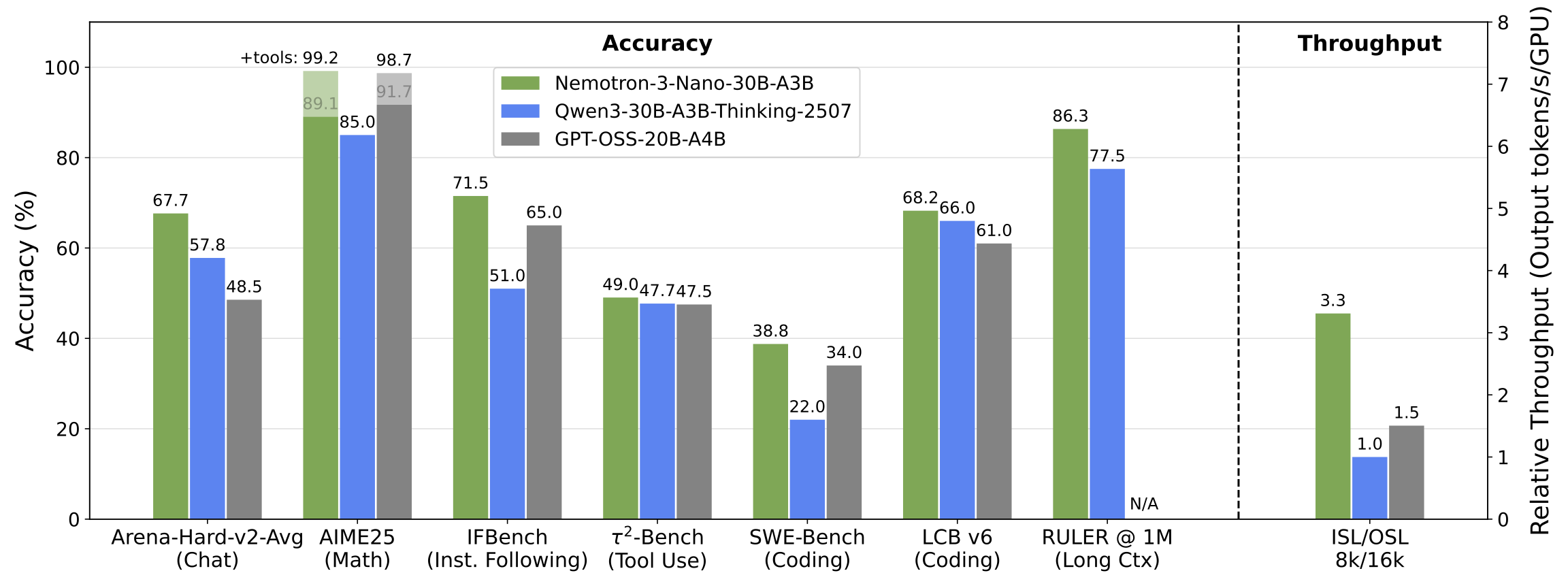

- 30 billion parameters built on a hybrid Mixture‑of‑Experts (MoE) + Mamba‑Transformer architecture.

- 1 M‑token context window, so you can feed it an entire novel and still get a coherent answer. It also stays “fast” even if you forget to lower the context length from the full 1M tokens to something like 32k.

- It can reason step‑by‑step or just give you the final answer – you pick the mode via a simple flag.

- Supports English plus five other languages (German, Spanish, French, Italian, Japanese).

In plain English: it’s like having a super‑smart assistant that can read a whole book, think through a problem, and then spit out a clear answer – all without you needing a PhD in AI.

My Work‑Side Story – From “It Won’t Scale” to “Whoa!”

At my day job we’re building an internal support chatbot for our dev team. The original version used a 30B model; it could answer simple FAQs but would stumble when the conversation got deeper than three exchanges. It also struggled when a task needed several agent calls.

- The Switch – I swapped the backend for Nemotron‑30B’s API (just a single endpoint, no extra infrastructure).

- Reasoning Mode On – Enabled the “generate reasoning trace” option. Suddenly the bot could explain why a particular error happened, step by step, before giving the final fix.

- Speed & Cost – Even with a 1 M token context I was only paying ~$0.06 per million input tokens on NVIDIA’s hosted service. For our use case that meant we could handle long support tickets without blowing the budget. And if you can run it on‑prem or locally, even better.
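To put that pricing in perspective, here is a rough back‑of‑the‑envelope calculation. It only uses the ~$0.06 per million input tokens figure quoted above; output‑token pricing isn’t covered, and the ticket counts and sizes are made‑up numbers purely for illustration.

```python
# Rough input-token cost estimate. The price is the ~$0.06 per million
# input tokens quoted in this post; the ticket sizes are hypothetical.
PRICE_PER_MILLION_INPUT_TOKENS = 0.06  # USD


def input_cost_usd(tokens: int,
                   price_per_million: float = PRICE_PER_MILLION_INPUT_TOKENS) -> float:
    """Return the input-side cost in USD for a given number of tokens."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # One request that fills the whole 1M-token context window.
    print(f"Full 1M context: ${input_cost_usd(1_000_000):.4f}")
    # 500 hypothetical support tickets at 20k input tokens each.
    print(f"500 tickets x 20k tokens: ${input_cost_usd(500 * 20_000):.2f}")
```

Swap in your own ticket volume and check the current price list before budgeting with it.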

The result? Our ticket resolution time dropped by 35 % and customers started actually enjoying talking to the bot. The faster responses also improve the whole user experience.

Why This Model Is Interesting (And Might Be for You Too)

| What It Gives You | Why It Matters |
|---|---|
| Massive context (1 M tokens) | Feed whole documents, books, or long codebases without losing the thread. |
| Hybrid MoE architecture | Only a handful of “expert” sub‑networks fire per token → cheaper inference and faster response. |
| Reasoning toggle | Choose between raw answer or step‑by‑step thinking – perfect for debugging or educational tools. |
| Commercial‑ready license | You can ship products that use the model, as long as you follow the open‑model licence (attribution, no malicious use). |
| Roadmap of bigger siblings | 100 B “Super” and ~500 B “Ultra” models are already in preview – start with Nano now and upgrade later without rewriting your code. |

If you’re building anything that needs understanding rather than just pattern‑matching, this model deserves a serious look.
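The “only a handful of experts fire per token” idea from the table is easier to see in code. What follows is a toy sketch of top‑k expert routing in plain Python, not Nemotron’s actual implementation: the number of experts, the value of `top_k`, the random router scores and the trivial “experts” are all assumptions chosen for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k=2):
    """Pick the top_k experts for one token and renormalise their weights.

    Returns a list of (expert_index, weight) pairs; the weights sum to 1.
    """
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, experts, router_scores, top_k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](token) for i, w in route_token(router_scores, top_k))


if __name__ == "__main__":
    random.seed(0)
    # Eight toy "experts": each just scales its input by a different factor.
    experts = [lambda x, k=k: x * (k + 1) for k in range(8)]
    scores = [random.gauss(0, 1) for _ in experts]  # stand-in for a learned router
    picked = route_token(scores, top_k=2)
    print("experts used:", [i for i, _ in picked], "of", len(experts))
    print("output:", moe_layer(1.0, experts, scores))
```

The point is that `moe_layer` only ever calls the selected experts, so the compute per token scales with `top_k` rather than with the total number of experts.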

How to Get Started (Without Breaking Anything)

- Grab an API key from the NVIDIA NIM portal – it’s free for trial use. Or just download the model from Hugging Face: nvidia/NVIDIA-Nemotron-3-Nano-30B-A3B-BF16

- Hit the endpoint: https://integrate.api.nvidia.com/v1/nemotron-3-nano-30b-a3b/generate (or the latest URL on the model card).

- Pick your mode – add "reasoning": true if you want that step‑by‑step trace, otherwise leave it out for a direct answer.

- Stay within the licence – keep the attribution file that ships with the model and don’t use it for disallowed content.

That’s literally all you need. The rest is just playing with prompts until you get the vibe you want.
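The steps above can be sketched as a small script using only Python’s standard library. Treat it as a starting point under stated assumptions: the endpoint URL and the "reasoning" flag are taken from this post, while the "prompt" field, the Bearer‑token header and the `NVIDIA_API_KEY` environment variable name are my guesses at the request shape, so check the model card before relying on them.

```python
import json
import os
import urllib.request

# Endpoint as given in this post; check the model card for the current URL.
ENDPOINT = "https://integrate.api.nvidia.com/v1/nemotron-3-nano-30b-a3b/generate"


def build_request(prompt: str, api_key: str, reasoning: bool = False,
                  endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single prompt.

    The "prompt" field and the Bearer header are assumptions; only the
    "reasoning" flag and the endpoint come from the post.
    """
    payload = {"prompt": prompt}
    if reasoning:
        payload["reasoning"] = True  # leave the key out for a direct answer
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # NVIDIA_API_KEY is a hypothetical environment variable name.
    key = os.environ.get("NVIDIA_API_KEY")
    req = build_request("Why does my build fail with exit code 137?",
                        key or "YOUR_KEY", reasoning=True)
    if key:
        with urllib.request.urlopen(req, timeout=60) as resp:
            print(json.loads(resp.read().decode("utf-8")))
    else:
        # No key set: show what would be sent instead of calling the API.
        print("Dry run, would POST:", req.data.decode("utf-8"))
```

If you run the model locally in LM Studio instead, its local server exposes an OpenAI‑compatible API, so the URL and request body will look different from this sketch.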

Looking Ahead – What’s Next?

The VentureBeat article that broke the news also hinted at two larger siblings:

- Nemotron‑3 Super (≈100B parameters) – aimed at multi‑agent workflows, high‑accuracy reasoning.

- Nemotron‑3 Ultra (≈500B parameters) – for the most demanding, complex tasks.

These are already being tested by early adopters like Accenture and ServiceNow. If you’re building a product that might need to scale up later, starting with the 30B Nano now gives you a smooth upgrade path.

Bottom Line

Nemotron‑30B isn’t just another “big model” on a marketing slide – it’s a tool that can turn a clunky prototype into something that actually works and even makes your family laugh. The blend of massive context, efficient MoE design, and commercial‑friendly licensing makes it a rare find in the open‑model world.

Give it a spin. Write a quick script, test a few prompts, and see how it feels to have a reasoning partner that can read an entire novel and still answer your questions in a second. Trust me – once you’ve seen what it can do, you’ll understand why I’m shouting “It’s awesome!” from the rooftops.